- EDA tools, the silent enablers, capture inevitable value: Every AI chip, from Nvidia's H100 to custom hyperscaler ASICs, requires electronic design automation software, creating a toll-booth business model with 90%+ gross margins that scales with ecosystem growth regardless of which chip designer wins.

- CPU renaissance challenges GPU hegemony: AMD's data center momentum, driven by EPYC server processors and MI300 AI accelerators, demonstrates that Nvidia's dominance faces credible competition from compute generalists with established enterprise relationships and competitive price-performance ratios.

- Concentration risk at unprecedented levels: Nvidia commands 92% market share in AI accelerators, while three companies (Synopsys, Cadence, Siemens) control 75% of chip design software, creating single points of failure that concern enterprise buyers and investors alike.

- The CapEx supercycle faces sustainability questions: Hyperscalers' combined AI infrastructure spending reached $210 billion in 2024, yet clear monetization pathways remain elusive for most applications beyond search enhancement and coding assistance.

- Vertical integration is accelerating: Major cloud providers are designing custom silicon (Google's TPUs, Amazon's Trainium, Microsoft's Maia) to reduce dependence on Nvidia, potentially compressing hardware margins by 2026-2027.

The AI Gold Rush: Navigating the Value Chain as Valuations Hit Stratospheric Heights

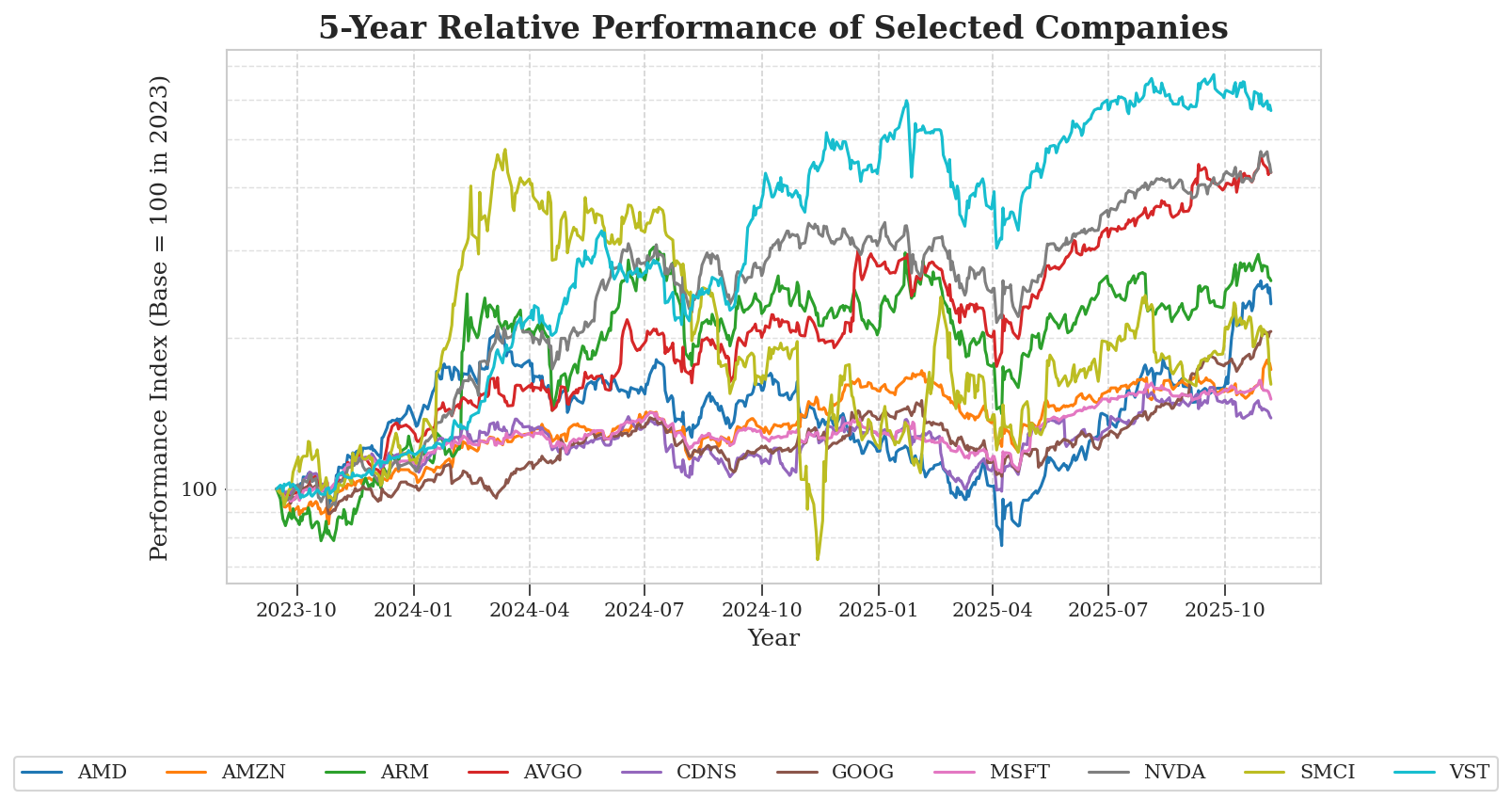

The artificial intelligence boom has created one of the most spectacular wealth-creation events in financial market history, with the AI ecosystem adding over $5 trillion in market capitalization since early 2023. Yet as we approach 2026, investors face a critical inflection point. Nvidia trades at 50x forward earnings despite decelerating revenue growth, while hyperscalers have committed more than $300 billion to CapEx in 2025 alone. The AI value chain runs from the electronic design automation tools that make chip creation possible, through the utilities supplying gigawatts of power, to the semiconductor designers and, ultimately, the model builders deploying these systems.

This article dissects the entire ecosystem to determine whether current valuations reflect sustainable competitive advantages or represent a speculative peak. We analyze ten companies across the value chain—from Cadence's design tools to Vistra's power generation, AMD and Nvidia's competing accelerators, and Microsoft's enterprise AI monetization—to identify where profits will ultimately accrue.

The critical question for 2026 is not whether AI transforms the economy—it will—but which companies capture value at reasonable valuations. Current market pricing suggests investors should favor infrastructure monopolies with pricing power over hardware suppliers facing inevitable margin compression.

Author: Analystock.ai

The AI Value Chain Architecture

The Invisible Foundation: Electronic Design Automation

Before any AI chip exists physically, it must be designed using electronic design automation (EDA) software—the industry's most overlooked yet essential component. Cadence Design Systems (CDNS) provides the tools that enable engineers to design, verify, and validate complex semiconductors. The company's digital and signoff tools are used in virtually every advanced chip design, from Nvidia's GPUs to AMD's accelerators to hyperscaler custom silicon.

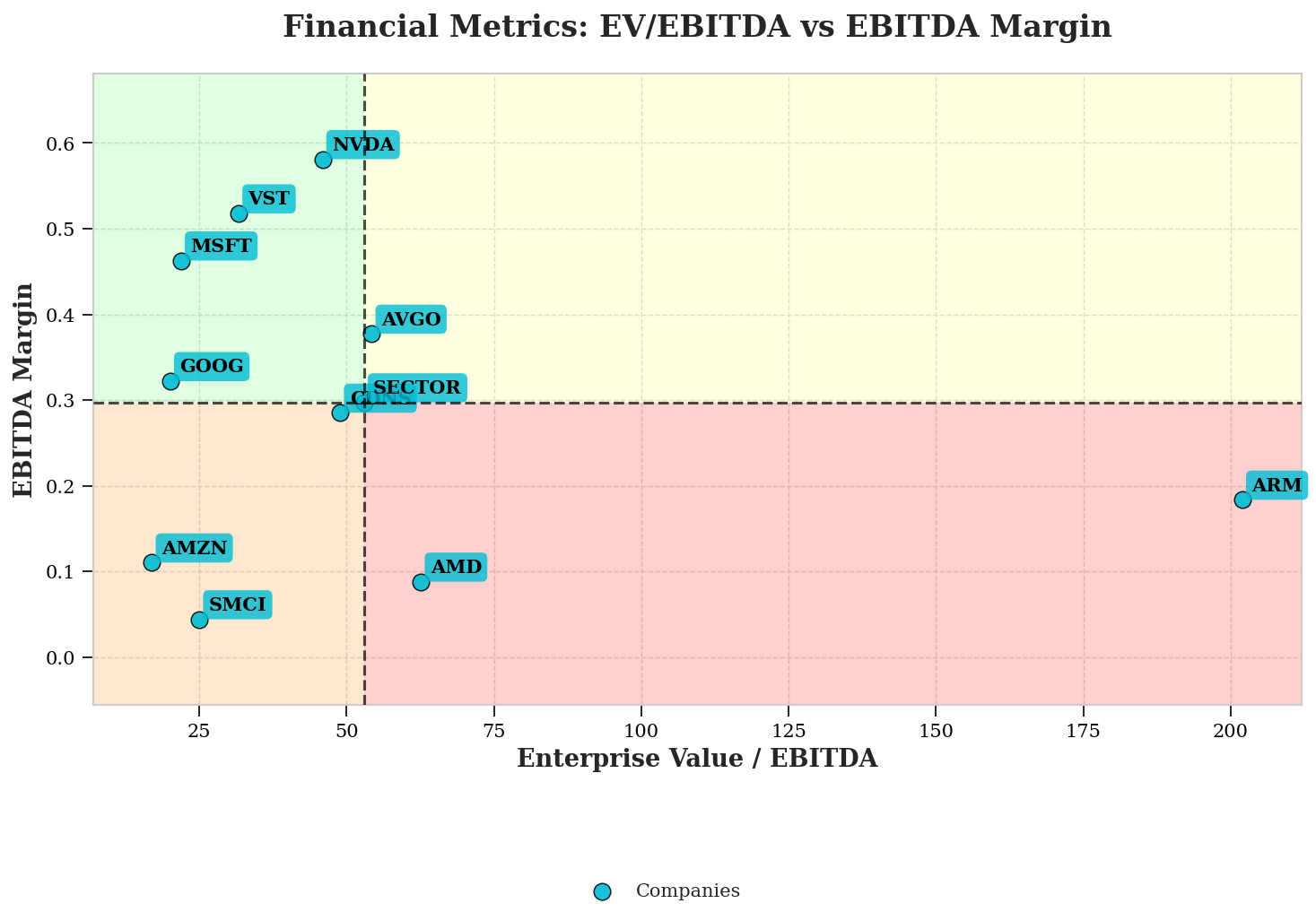

Cadence's revenue reached $4.1 billion in fiscal 2023, growing 14% year-over-year, with AI-related design activity accelerating demand. Management disclosed that AI-related chip designs now represent over 40% of their advanced node design starts, up from 15% in 2022. The EDA business model exhibits extraordinary economics: 92% gross margins, minimal capital requirements, and sticky customer relationships spanning decades. Once engineering teams standardize on Cadence's toolchains, switching costs prove prohibitive—migrating would require retraining hundreds of engineers and validating new workflows.

The AI chip complexity explosion drives EDA growth. A cutting-edge 5nm AI accelerator contains 50-80 billion transistors versus 10 billion in previous generation server chips. Verification requires 10x more simulation compute for AI chips than traditional designs. Cadence's Palladium Z2 emulation platform, priced at $15-20 million per system, has multi-year backlogs as every major chip designer requires multiple units for AI projects.

The Power Providers: Baseload Generation for 24/7 Demand

AI data centers require uninterrupted 24/7 power delivery, creating renewed demand for baseload generation capacity. Vistra Corp (VST) operates 41GW of capacity including nuclear and natural gas assets. The company's stock appreciated 285% in 2024 as investors recognized that AI data centers require baseload power that intermittent renewables cannot fully provide. Microsoft's September 2024 deal to restart Three Mile Island's Unit 1 reactor exemplifies this trend, with reported pricing at $95/MWh versus $40/MWh market averages.

The power bottleneck has emerged as the binding constraint on AI expansion. Virginia's Loudoun County imposed data center moratoriums due to grid capacity limits. Wait times for new high-capacity grid connections now extend 4-6 years in premium markets. Vistra's competitive advantage lies in existing generation assets requiring no permitting delays, with natural gas plants now running continuously for data center loads at 80%+ capacity factors versus historical 10-20%.

Semiconductor Design: The Duopoly Under Pressure

Nvidia's dominance remains historically unprecedented—92% market share in AI training and 85% in inference deployments. In fiscal Q3 2025, Nvidia generated $30.8 billion in data center revenue with 74% gross margins. The company's CUDA software ecosystem, with over 4 million developers, creates switching costs extending beyond hardware performance.

Yet AMD has emerged as the credible alternative. The MI300X accelerator delivers large language model inference performance competitive with the H100 while offering 40% more memory bandwidth and aggressive pricing ($10,000-12,000 versus $25,000-30,000 for the H100). AMD's data center segment revenue reached $3.5 billion in Q3 2024, growing 122% year-over-year. Management guided to $5 billion in MI300 series revenue for 2024, with Microsoft, Meta, and Oracle as major customers.

AMD's high-capacity memory design in MI300X (192GB of HBM3 versus the H100's 80GB) lets a single accelerator hold larger models and serve more concurrent users, which is critical for inference economics. Meta's disclosure that MI300X powers Llama inference workloads validates this architectural choice. The company's EPYC server CPUs power about 30% of servers, creating procurement familiarity that reduces adoption friction for its accelerators.
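A rough way to see why memory capacity matters for inference economics is to compare HBM capacity per dollar using the price ranges quoted above. This is a minimal sketch that takes the midpoint of each range. That is an assumption: actual contract pricing varies by customer and volume.

```python
# Compare HBM capacity per dollar for MI300X and H100 using the price
# ranges quoted in the text. Using range midpoints is an assumption;
# real contract prices vary by customer and volume.

def midpoint(low: float, high: float) -> float:
    """Return the midpoint of a price range."""
    return (low + high) / 2


def gb_per_thousand_usd(memory_gb: float, price_usd: float) -> float:
    """HBM gigabytes obtained per $1,000 of accelerator spend."""
    return memory_gb / (price_usd / 1_000)


mi300x = gb_per_thousand_usd(192, midpoint(10_000, 12_000))
h100 = gb_per_thousand_usd(80, midpoint(25_000, 30_000))

print(f"MI300X: {mi300x:.1f} GB per $1,000")
print(f"H100:   {h100:.1f} GB per $1,000")
print(f"Ratio:  {mi300x / h100:.1f}x")
```

At these midpoints, MI300X buys about six times as much HBM per dollar. The comparison covers memory capacity only. It ignores compute throughput, software maturity, and power draw.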

Broadcom: The Custom Silicon Enabler

Broadcom (AVGO) has established itself as the hyperscaler custom ASIC provider, designing bespoke AI chips for Google (TPU v5) and Meta. Management disclosed $12.2 billion in AI-related semiconductor revenue for fiscal 2024, with custom accelerators achieving 35% operating margins. Google's TPU v5e delivers 4x better inference price-performance than H100 for specific workloads. Meta's MTIA v2 optimizes for recommendation systems, achieving 50% lower TCO than commercial alternatives.

Broadcom's networking business provides complementary AI exposure. The company's Tomahawk 5 switches deliver 51.2Tbps of bandwidth for GPU interconnect fabrics. Infrastructure solutions generated $4.3 billion in Q3 2024 revenue with 65% gross margins and minimal competition. Management sizes the serviceable addressable market for its AI chips and networking at $60-90 billion in fiscal 2027.

Arm Holdings: The Architectural Revolution

Arm Holdings (ARM) provides the instruction set architecture increasingly deployed in AI infrastructure. Amazon's Graviton4 processors power AWS inference workloads with 40% better price-performance than x86 alternatives. Microsoft's Azure Cobalt 100 launched in Q4 2024 for AI inference optimization. Arm reported 96% year-over-year growth in royalty revenue from cloud infrastructure in Q2 FY2025, with gross margins exceeding 95%.

The architectural advantages for AI inference favor Arm: superior power efficiency (critical for TCO), flexible core configurations, and established dominance in edge computing, where 90% of AI inference ultimately occurs. Nvidia's Grace CPU, based on Arm Neoverse V2, validates the architecture's data center viability. When paired with Hopper GPUs in GH200, Grace connects over NVLink-C2C, which Nvidia rates at roughly 7x the bandwidth of PCIe Gen5.

Cloud Platforms and Model Builders

Microsoft (MSFT) has emerged as the AI monetization leader. GitHub Copilot surpassed $1 billion in annual recurring revenue in Q4 2024, while Microsoft 365 Copilot reached 70% Fortune 500 adoption at $30/user/month. Azure AI services revenue grew 186% year-over-year in Q2 FY2025, capturing 35% of enterprise AI workload spending. Yet the company's fiscal 2025 CapEx guidance of $80 billion represents 24% of projected revenue. That pace of spending must continue through 2026-2027 before the investment generates positive returns.

Alphabet (GOOGL) faces existential challenges from AI disrupting search advertising. Google Cloud revenue, lifted by AI demand, grew 35% to $11.4 billion in Q3 2024, but core search faces margin pressure as AI-enhanced results reduce ad impressions. The company's $45 billion 2025 AI CapEx represents a defensive response to preserve search dominance.

Amazon (AMZN) leverages AWS's 42% cloud market share, emphasizing tools for customers to build applications rather than competing as a model builder. This positioning generated $9.3 billion in Q3 2024 AI revenue with less margin pressure than competitors face, and AWS operating margins of 38% reflect infrastructure leverage.

Ecosystem Perspectives and Sustainability Analysis

The CapEx Cycle: Peak or Plateau?

Combined hyperscaler spending reached $210 billion in 2024, with 2025 projections exceeding $300 billion. AI-specific infrastructure accounts for 60-70% of spending, or $180-210 billion. Historical precedents provide concerning context—the telecom bubble saw infrastructure spending surge to $150 billion annually before collapsing 80% as overcapacity materialized.
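The AI-specific range follows directly from the 2025 projection. The sketch below also applies the 80% telecom-era collapse as a purely hypothetical stress test. Two simplifying assumptions are involved: it uses $300 billion as the base, although the text says spending is "exceeding" that figure, and it applies the drawdown uniformly.

```python
# Reproduce the AI-specific CapEx range and apply a telecom-style
# contraction as a purely hypothetical stress scenario ($bn).

capex_2025_bn = 300.0            # projected 2025 hyperscaler CapEx
ai_share_range = (0.60, 0.70)    # AI-specific share of spending
telecom_drawdown = 0.80          # size of the telecom-era collapse

ai_capex_bn = [capex_2025_bn * share for share in ai_share_range]
stressed_bn = [x * (1 - telecom_drawdown) for x in ai_capex_bn]

print(f"AI-specific CapEx: ${ai_capex_bn[0]:.0f}-{ai_capex_bn[1]:.0f} billion")
print(f"After an 80% contraction: ${stressed_bn[0]:.0f}-{stressed_bn[1]:.0f} billion")
```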

Early monetization data suggests caution. OpenAI's ChatGPT Plus generates $2.4 billion in annual revenue, and the $255 million OpenAI reportedly spends each year on inference compute is only one part of its cost base. Once model training, free-tier usage, and staffing are included, OpenAI as a whole reportedly operates at a substantial loss, implying unit economics that improve only with massive scale. If the industry's flagship application struggles with profitability, questions arise about enterprise use cases with lower willingness to pay.

EDA Tools: Inevitable Growth Regardless of Chip Winner

Cadence's position as an ecosystem enabler provides unique insulation from competitive uncertainties. Whether Nvidia maintains dominance, AMD captures share, or hyperscaler custom silicon proliferates, every scenario requires advanced EDA tools. As chips move to 3nm and 2nm through 2025-2026, design costs rise steeply: a 3nm AI chip costs $500-700 million to design versus $300 million for 5nm, with verification consuming 60% of budgets.
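The node-to-node jump is easy to quantify from the cost figures above. This minimal sketch applies the 60% verification share uniformly to each design budget. That is a simplification, since the share will differ from project to project.

```python
# 5nm -> 3nm design-cost step and implied verification spend,
# using the figures quoted in the text ($ millions).

cost_5nm_mn = 300
cost_3nm_range_mn = (500, 700)
verification_share = 0.60  # assumed to apply uniformly to every budget

ratios = [cost / cost_5nm_mn for cost in cost_3nm_range_mn]
verification_mn = [cost * verification_share for cost in cost_3nm_range_mn]

for cost, ratio, verif in zip(cost_3nm_range_mn, ratios, verification_mn):
    print(f"3nm at ${cost}M: {ratio:.2f}x the 5nm cost, ~${verif:.0f}M on verification")
```

A single node transition therefore raises design cost by roughly 1.7-2.3x, with $300-420 million going to verification alone.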

Industry consolidation strengthens EDA incumbents. The three-company oligopoly (Cadence, Synopsys, Siemens) controlling 75% of the market exhibits pricing power absent in more competitive segments. Cadence's average selling prices increased 8-10% annually from 2021-2024 despite general technology deflation. The subscription model transition creates recurring revenue streams generating 92% gross margins with 35% operating margins.

AMD's Strategic Window and Execution Risk

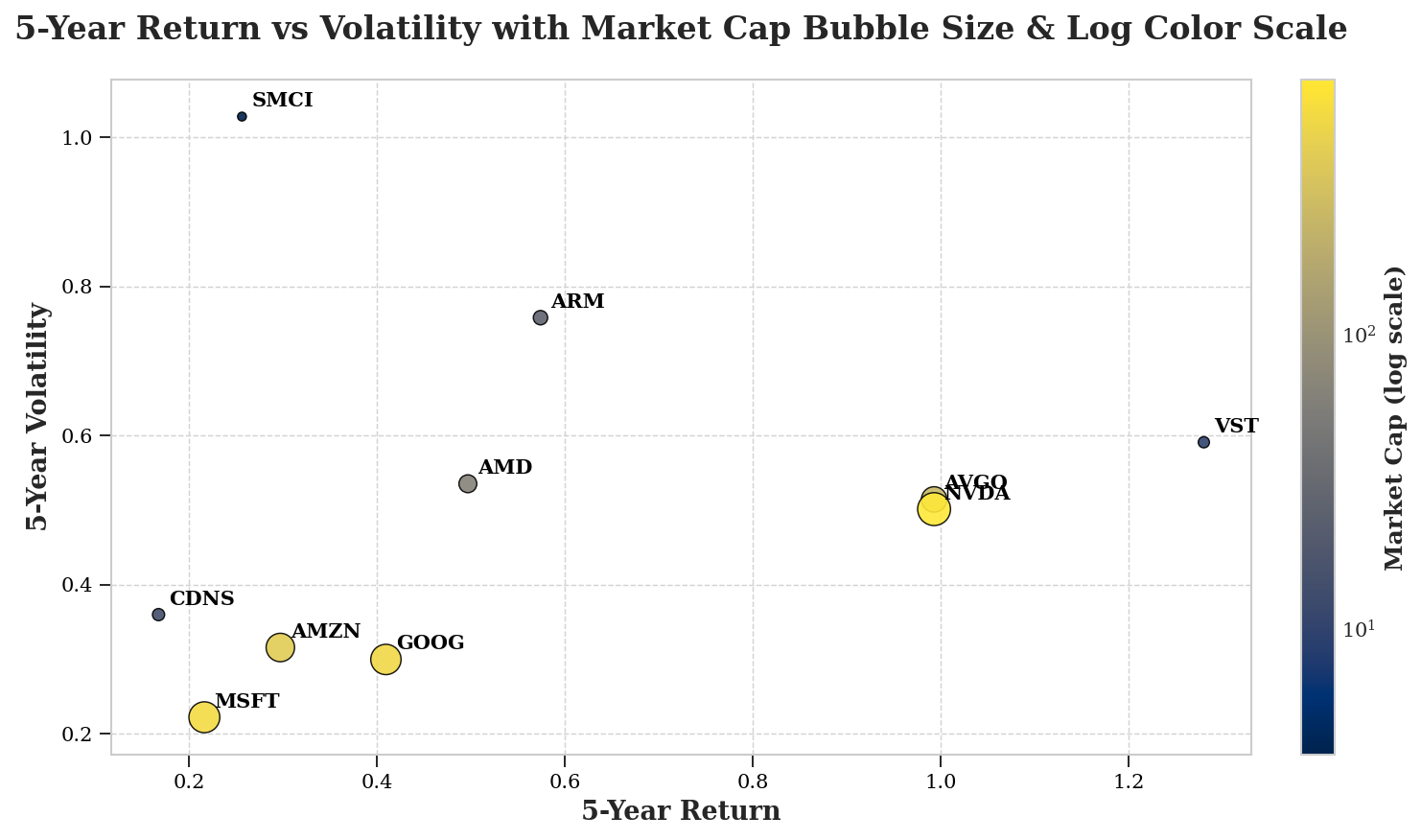

AMD's MI300 opportunity represents a potentially transformative inflection, yet execution risks loom. Prior GPU attempts failed to dislodge Nvidia, and ROCm software remains immature versus CUDA's decade-plus lead. Management's $5 billion MI300 revenue guidance equals only about one-sixth of the data center revenue Nvidia generated in a single quarter.

The strategic window may be narrow. Nvidia's Blackwell architecture delivers a 2.5x performance improvement, potentially shrinking AMD's competitive window further. Yet hyperscaler demand for alternatives creates an unprecedented opportunity. Meta explicitly seeks 'two viable suppliers for every critical component.' If AMD captures 15-20% of the AI accelerator TAM by 2026, this represents $30-40 billion in potential revenue. The CPU franchise provides resilience: EPYC generates $7-8 billion annually with 31% market share, ensuring relevance regardless of GPU outcomes.
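Two quick ratios help calibrate the AMD numbers. The sketch compares the $5 billion MI300 guidance with the $30.8 billion Nvidia reported for a single quarter. It then compares the guidance with a naive four-quarter run rate. The run rate is an assumption, since Nvidia's quarterly revenue was still rising. Finally, it backs out the total market implied by each end of the 15-20% share scenario.

```python
# Cross-check AMD's figures against numbers quoted elsewhere in the text ($bn).

mi300_guidance_bn = 5.0
nvidia_dc_quarter_bn = 30.8   # Nvidia data center revenue, fiscal Q3 2025

share_of_one_quarter = mi300_guidance_bn / nvidia_dc_quarter_bn
# Naive annualization: four times a single quarter (an assumption).
share_of_run_rate = mi300_guidance_bn / (4 * nvidia_dc_quarter_bn)

# "15-20% of AI accelerator TAM ... represents $30-40 billion"
scenarios = [(30.0, 0.15), (40.0, 0.20)]
implied_tam_bn = [revenue / share for revenue, share in scenarios]

print(f"MI300 guidance vs one Nvidia quarter: {share_of_one_quarter:.0%}")
print(f"MI300 guidance vs annualized run rate: {share_of_run_rate:.0%}")
print(f"Implied AI accelerator TAM: ${implied_tam_bn[0]:.0f}-{implied_tam_bn[1]:.0f} billion")
```

Both ends of the share scenario imply the same total market of roughly $200 billion. Against Nvidia's annualized run rate, the MI300 guidance is closer to 4% than to 16%.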

The Inference Inflection and Architectural Shifts

AI workload composition is shifting from training to inference. Nvidia disclosed that inference now represents 40% of data center revenue versus 25% in early 2023. Inference workloads favor different architectures, prioritizing lower precision, batch processing efficiency, and power efficiency over raw training performance.

This transition benefits inference-optimized solutions. Broadcom's custom ASICs deliver 2.5x better TCO for inference than Nvidia GPUs. Arm architecture dominates mobile and edge inference, with 250 billion chips shipped cumulatively. AMD's 192GB memory capacity enables superior multi-user serving for inference. However, the inference market may prove more competitive: CPUs offer competent solutions at a fraction of GPU cost for many workloads, while startups like Groq achieve 500 tokens/second versus 100-150 for H100s.

Energy Constraints as the Next Bottleneck

Power availability has emerged as the binding constraint. Vistra's nuclear and natural gas assets now command 3-4x historical pricing in hyperscaler agreements, with forward revenue visibility through 2030. Yet hyperscalers' own power investments signal vertical integration intent. Examples include Microsoft's $1.6 billion Constellation partnership to restart Three Mile Island, Amazon's $650 million purchase of Talen Energy's nuclear-powered data center campus, and Amazon's investment in X-energy. If hyperscalers develop 10-15GW of owned generation by 2028, Vistra's scarcity premium evaporates.

Regulatory dynamics create uncertainty. Microsoft reported in 2024 that its emissions had risen 30% from its 2020 baseline despite carbon-neutral pledges, and Google reported a 48% rise since 2019. If regulators prioritize decarbonization over data center growth, deployment constraints may limit AI scaling regardless of chip availability.

Custom Silicon and the Threat to Nvidia's Margins

Every major hyperscaler develops custom AI accelerators. Broadcom's AI semiconductor revenue trajectory, from $2 billion in fiscal 2022 to $12.2 billion in fiscal 2024, illustrates the magnitude of the threat. If hyperscalers allocate 20% of AI chip spending to custom solutions by 2026, this represents $30-40 billion shifting away from Nvidia.
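The $2 billion and $12.2 billion figures cited above imply a steep compound growth rate. The 20% scenario also implies a specific size for total AI chip spending. This sketch computes both from the text's numbers. It treats fiscal 2022 to fiscal 2024 as exactly two years of compounding.

```python
# Growth implied by Broadcom's fiscal 2022 -> 2024 trajectory and the AI chip
# spending base implied by the 20% custom-silicon scenario ($bn).

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


growth = cagr(2.0, 12.2, years=2)
custom_share = 0.20
shift_range_bn = (30.0, 40.0)
implied_spend_bn = [shift / custom_share for shift in shift_range_bn]

print(f"Implied annual growth, FY2022-FY2024: {growth:.0%}")
print(f"Implied 2026 AI chip spending: ${implied_spend_bn[0]:.0f}-{implied_spend_bn[1]:.0f} billion")
```

The scenario therefore assumes total AI chip spending of $150-200 billion in 2026, following roughly 147% annual growth in Broadcom's reported trajectory.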

Nvidia's counter-strategy emphasizes software lock-in and a rapid release cadence. Annual GPU releases force custom ASIC developers into perpetual catch-up. Yet historical technology precedents suggest that 70%+ gross margins attract competition that eventually compresses profitability: Intel's CPU business and Cisco's networking business both followed this pattern.

Competitive Positioning and Investment Implications

The Definitive Winners: Hyperscalers with Distribution

Microsoft appears best positioned for 2026. The enterprise software installed base creates natural AI monetization with positive unit economics—Microsoft 365 Copilot's $30/month represents 15% incremental revenue from existing customers. Azure's 35% enterprise AI workload share provides differentiation. At 32x forward P/E, valuation appears justified by AI-driven productivity software margin expansion.

Amazon's AWS demonstrates operating leverage despite the infrastructure buildout: operating margins expanded from 29% to 38% in Q3 2024. Yet $100 billion in 2025 CapEx raises return questions. Google faces the greatest existential threat: search's 60% operating margins cannot be replicated if AI reduces ad impressions. At 22x forward P/E, the stock may underestimate search disruption risk.

The Semiconductor Competition: AMD's Asymmetric Opportunity

AMD presents compelling risk-reward at 42x forward earnings. If MI300 scales to $15-20 billion by 2026 (plausible given hyperscaler diversification mandates), AMD's data center segment could generate $25-30 billion in revenue with 50%+ gross margins. The thesis hinges on three catalysts: MI300 proving competitive performance, EPYC gaining share to 35-40% of the market, and the inference shift favoring AMD's memory architecture. If two of the three materialize, AMD justifies its current valuation with 20-30% appreciation potential.
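Adding the MI300 scenario to EPYC's current $7-8 billion in annual revenue shows how much of the $25-30 billion segment target must come from elsewhere. This sketch holds EPYC flat, which is a deliberately conservative assumption. Under that assumption, EPYC share gains or other data center products would need to cover the remaining gap.

```python
# Rough build-up of AMD's 2026 data center segment from components named
# in the text ($bn). Holding EPYC at today's run rate is an assumption.

mi300_range_bn = (15, 20)    # MI300 scenario for 2026
epyc_range_bn = (7, 8)       # current annual EPYC revenue
segment_range_bn = (25, 30)  # data center segment scenario

low = mi300_range_bn[0] + epyc_range_bn[0]
high = mi300_range_bn[1] + epyc_range_bn[1]
gaps = sorted((segment_range_bn[0] - low, segment_range_bn[1] - high))

print(f"MI300 + flat EPYC: ${low}-{high} billion")
print(f"Remaining gap: ${gaps[0]}-{gaps[1]} billion")
```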

Downside risks center on execution. AMD's GPU roadmap has historically suffered delays, and any MI350/MI400 setbacks would let Nvidia widen the gap. For conservative investors, AMD offers diversified data center exposure: market-leading CPUs generating durable cash flows, AI accelerator optionality, and embedded/gaming businesses that stabilize results through cycles.

Nvidia's Kingdom: Durable but Margin-Compressed Future

Nvidia's fiscal 2025 revenue projection of $120+ billion represents roughly 90% growth despite the law of large numbers. Gross margins sustained above 70% defy semiconductor industry norms. Blackwell delivers a 2.5x performance improvement, maintaining technical leadership. Yet the company trades at 48x forward earnings with decelerating growth (from 260% to 94% year-over-year). Hyperscaler custom silicon explicitly aims to reduce dependence on Nvidia. Export restrictions limit China to 12-15% of revenue versus a historical 25%.

Nvidia resembles Cisco circa 2000: dominant incumbent facing inevitable margin compression, yet positioned to capture largest absolute opportunity. The stock may deliver moderate returns but appears vulnerable to multiple compression as growth normalizes to 20-30%.

Broadcom and Arm: Asymmetric Plays on Ecosystem Transformation

If Broadcom's custom ASIC revenue scales to $60 billion by fiscal 2027, that would imply roughly $180 billion of market value contribution at industry multiples, against a current market cap of $640 billion. At 32x forward earnings, Broadcom trades at a discount to Nvidia despite superior positioning for the inference and custom silicon transitions. The company's networking segment, generating $4.3 billion quarterly with minimal competition, adds defensibility. The key risk is hyperscaler monopsony power compressing margins.

Arm trades at 90x forward earnings, pricing in aggressive growth. Yet the thesis remains compelling: every hyperscaler CPU project generates recurring royalties at 95%+ gross margins. If Arm captures 40-50% of data center CPU share by 2027, up from 15-20% today, royalty revenue could reach $6-8 billion from the current $3.5 billion. Power efficiency advantages (30-40% lower power consumption than x86) justify adoption for TCO-sensitive operators. Automotive, edge, and IoT exposure provides diversification, with $10 billion in automotive contract value ensuring visibility through 2028-2030.

The EDA Oligopoly: Cadence's Durable Compounding

Cadence represents the highest-quality asset in the AI value chain from a business-model perspective. It combines 35-40% market share, minimal capital requirements (CapEx below 3% of revenue), demonstrated pricing power, and decade-long switching costs. At 50x forward earnings, the multiple reflects extraordinary economics: its rule-of-40 score exceeds 55, placing it in the top decile of software companies. Operating margins expanded from 28% to 35% despite continued R&D investment.
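The rule of 40 simply adds revenue growth to a profit margin. This sketch plugs in the 14% growth and 35% operating margin cited in this article, which gives 49. A score above 55 therefore presumably rests on a different margin measure, such as free-cash-flow or non-GAAP operating margin, or on faster recent growth. The sketch makes that dependency explicit rather than asserting which measure was used.

```python
# Rule-of-40 score: revenue growth plus a profit margin, both in percent.
# Which margin to use (operating, non-GAAP, free cash flow) is a convention
# that varies between analysts.

def rule_of_40(revenue_growth_pct: float, margin_pct: float) -> float:
    """Return the rule-of-40 score for growth and margin given in percent."""
    return revenue_growth_pct + margin_pct


growth_pct = 14.0      # fiscal 2023 revenue growth cited for Cadence
op_margin_pct = 35.0   # operating margin cited for Cadence

score = rule_of_40(growth_pct, op_margin_pct)
margin_needed_for_55 = 55.0 - growth_pct

print(f"Score with the cited figures: {score:.0f}")
print(f"Margin needed to reach 55 at 14% growth: {margin_needed_for_55:.0f}%")
```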

The AI chip pipeline provides unprecedented visibility. With 650+ designs in progress and 18-36 month cycles, 2026-2027 revenue is substantially pre-determined. Every process node shrink drives 30-40% higher design costs. The digital twin strategy expands addressable market—system-level simulation of data centers represents $500 million annual opportunity, potentially adding $1-2 billion revenue by 2027-2028.

For quality-focused investors, Cadence merits core allocation. The company should compound at 15-20% annually for the next decade, with downside protection from subscription predictability and capital-light model enabling consistent buybacks.

Vistra's Tactical Opportunity and Strategic Risks

Vistra presents a tactical rather than strategic investment. Its baseload capacity addresses a genuine near-term constraint, yet its valuation of 32x P/E substantially exceeds historical 8-12x multiples. Regulatory barriers ensure existing capacity commands premium pricing through 2027-2028, providing $8-10 billion in incremental cumulative cash flow. However, hyperscalers' vertical integration threatens to commoditize electricity supply. If hyperscalers develop 10-15GW of owned capacity by 2028-2030, merchant pricing would revert toward $40-50/MWh from the current $90-100/MWh, compressing 2028+ earnings by 30-40%.

For tactical traders, Vistra offers 6-18 month appreciation as power constraints intensify. The company's $1.8 billion nine-month free cash flow supports $3-4 billion annual buybacks. However, strategic investors should recognize mean-reversion risks, suggesting profit-taking rather than accumulation.

Portfolio Construction: Barbell Strategy for 2026

The AI ecosystem's valuation extremes suggest barbell construction combining high-quality infrastructure monopolies with selective growth opportunities.

- Core holdings (50-60%): Microsoft (enterprise monetization), Cadence (EDA oligopoly), Broadcom (custom silicon enabler). These exhibit sustainable advantages, clear monetization, and reasonable valuations (30-35x for Microsoft/Broadcom, 50x for Cadence justified by superior economics).

- Growth/Opportunistic (30-40%): AMD (asymmetric AI accelerator opportunity with CPU protection), Arm (architectural transition with capital-light model), Amazon (AWS infrastructure scale).

- Tactical (10-20%): Vistra (near-term power scarcity with defined exit), Nvidia (retain existing positions, avoid new deployment).

- Avoid entirely: Server hardware suppliers (commodity businesses with margin compression), pure-play AI software without distribution.
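The bucket ranges above apply to whole buckets, and their midpoints sum to 105%, so turning them into position sizes requires a few choices. This sketch rescales the midpoints to 100% and equal-weights holdings within each bucket. Both are illustrative assumptions, not part of the recommendation. In practice, Nvidia's tactical weight would apply only to existing positions.

```python
# Convert the bucket ranges into illustrative per-holding weights.
# Assumptions: use each range's midpoint, rescale midpoints to sum to 100%,
# and split each bucket equally among its holdings.

buckets = {
    "core": ((50, 60), ["Microsoft", "Cadence", "Broadcom"]),
    "growth": ((30, 40), ["AMD", "Arm", "Amazon"]),
    "tactical": ((10, 20), ["Vistra", "Nvidia"]),
}

midpoints = {name: sum(bounds) / 2 for name, (bounds, _) in buckets.items()}
total = sum(midpoints.values())  # 55 + 35 + 15 = 105, hence the rescaling

weights = {}
for name, (_, holdings) in buckets.items():
    bucket_weight = midpoints[name] / total
    for holding in holdings:
        weights[holding] = bucket_weight / len(holdings)

for holding, weight in weights.items():
    print(f"{holding:<10} {weight:6.1%}")
```

Under these assumptions, each core name lands near 17.5%, each growth name near 11.1%, and each tactical name near 7.1%.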

Summary

The AI ecosystem's late-2024 valuation extremes reflect both transformation potential and speculative excess. Our analysis finds that the definitive winners combine sustainable competitive advantages with clear monetization pathways. Cadence emerges as the highest-quality asset for three reasons. Every AI chip requires EDA tools regardless of which designer wins. Its 92% gross margins and subscription model generate predictable cash flows. Rising chip complexity drives inevitable growth. At 50x forward earnings, the valuation already reflects that quality, but the business warrants premium pricing.

Microsoft remains the enterprise monetization leader, with an AI business that has passed a $10 billion annual revenue run rate, demonstrating real demand, though $80 billion in annual CapEx raises return questions. Within semiconductors, AMD presents the most compelling asymmetric opportunity: MI300 accelerators offer a credible Nvidia alternative while EPYC CPUs provide a relationship foundation and downside protection. Broadcom's custom ASIC business, scaling toward $20 billion annually, positions the company as the primary Nvidia alternative at a more attractive valuation. Arm provides pure-play exposure to the architectural transition, with a 95% gross margin royalty model leveraging power efficiency advantages. Vistra represents a tactical 6-18 month opportunity on power scarcity but faces commoditization risk from hyperscaler vertical integration.

The 2026 verdict: The AI theme remains investable but requires selectivity. Favor quality infrastructure (Cadence, Microsoft, Broadcom) and asymmetric growth (AMD, Arm) while avoiding Nvidia at current valuations and commodity hardware suppliers. Expected returns favor infrastructure monopolies with pricing power (15-25% annually) over hardware suppliers facing margin compression. The gold rush continues, but real wealth flows to those providing indispensable tools—Cadence's design software, Microsoft's enterprise distribution, Broadcom's custom silicon—rather than those staking claims at stratospheric valuations.